Prelude

The extraordinary woman who mentored me through graduate school and co-chaired my PhD committee, Dr. Laurie Nelson, frequently talked to me about the idea of “current best thinking.” Characterizing something as your “current best thinking” gives you permission to share where you are in your work while simultaneously making it clear that your thinking will still evolve in the future.

It is critically important to remember that both open education and generative AI are tools and approaches – they’re means to an end, methods for accomplishing a goal or solving a problem. I’m interested in solving problems of access and effectiveness in education. I think open education and generative AI have a lot to offer toward solutions to these problems. But I want to, from the outset, caution all of us (myself included) against becoming enamored with either open education or generative AI in and of themselves. As they say, you should fall in love with your problem, not your solution.

Below is my current best thinking about how open education and generative AI can come together to help us make progress on problems of access and effectiveness. It will definitely evolve in the future.

Introduction

Bozkurt and more than 40 co-authors (Bozkurt et al., 2024) provide a comprehensive catalog of ways that generative AI might harm education. Their list includes:

Digital Divide and Educational Inequality

GenAI may exacerbate existing inequalities within education:

- Unequal Access: High costs of advanced GenAI tools along with infrastructure requirements limit accessibility for underprivileged students and institutions.

- Widening the Gap: Those with access to premium GenAI services may gain advantages that increase the disparity between wealthy and disadvantaged learners.

- Global Inequities: Developing countries may lack the infrastructure to support GenAI, hindering educational progress.

Commercialization and Concentration of Power

The development and control of GenAI are dominated by a few large corporations, which poses several concerns:

- Profiteering by Big Tech: Companies may prioritize profit over ethical considerations, impacting education negatively.

- Limited Collaboration with Academia: Lack of partnership between tech companies and educational institutions hinders innovation and transparency.

- Monopolization of Knowledge: Concentration of GenAI development within corporations can lead to control over information and educational content.

Lack of Representation

GenAI may reflect and perpetuate societal biases through:

- Western-Centric Perspectives: GenAI models trained predominantly on Western data may not adequately represent global diversity.

- Linguistic Limitations: Disparities between high- and low-resource languages may disadvantage non-English speakers.

- Cultural Homogenization: GenAI may promote a narrow worldview, suppressing cultural differences.

These concerns should sound familiar to those working in open education. These are many of the same issues that open education advocates have raised about proprietary textbooks and other proprietary learning materials for decades. And just as openness has been a powerful tool for combatting these issues with traditional learning materials, openness has an important role to play in addressing these concerns with generative AI.

Understanding LLMs as Course Materials

Over the next several years course materials will likely shift from formats that look more like traditional textbooks toward formats that look more like large language models (LLMs) and other generative AI tools. The shift to these tools, which comes with risks like those described above, threatens to erode important progress toward affordability, access, and equity made by the open education movement. Understanding that LLMs are course materials can help us think more clearly about what the future of course materials might look like and how and why open continues to be important going forward.

Large educational materials publishers like Pearson, McGraw-Hill, and Cengage spend a significant amount of time and money creating proprietary course materials. Because these products are so expensive and time-intensive to create (sometimes millions of dollars per product), most instructors end up adopting one of these pre-existing resources rather than creating their own.

About 25 years ago, individuals and then organizations began creating openly licensed alternatives to these proprietary products. Large OER publishers like OpenStax, Lumen, and CMU OLI spend a significant amount of time and money creating open content. These OER are significantly more affordable than the proprietary alternatives and, because of their open licensing, can serve as the foundation for a wide range of innovations in teaching and learning.

In the generative AI space, companies like OpenAI, Anthropic, and Google spend a significant amount of time and money creating proprietary LLMs. Because these LLMs are so expensive and time-intensive to create (possibly more than a hundred million dollars per model), most people end up using one of these models rather than creating their own.

A few years ago, organizations began creating openly licensed alternatives to these proprietary LLMs. Organizations like Meta, Mistral, and IBM spend a significant amount of time and money creating LLMs and openly licensing the model weights so that everyone can retain, reuse, revise, remix, and redistribute them. These “foundation” models provide the foundation upon which you can build a wide range of innovations in teaching and learning.

| Creators of Proprietary Course Materials | Creators of Open Course Materials |
| --- | --- |
| Pearson, McGraw-Hill, Cengage | OpenStax, Lumen, CMU OLI |

| Creators of Proprietary LLMs | Creators of Open LLMs |
| --- | --- |
| OpenAI, Anthropic, Google | Meta, Mistral, IBM |

A critical insight I’ve gained through painful experience over the last 26 years is this: the majority of faculty won’t adopt OER unless it comes with all the supplemental materials and other quality-of-life improvements provided by traditional publishers – lecture slides, assignments with rubrics, automatically graded homework, quiz banks, etc. (The few faculty who will adopt without these are the ones who enjoy making their own supplemental materials.) We can expect LLM adoption trends to look similar.

Think, for a moment, of LLMs as the “textbooks” of this next phase of course materials. While I’m not ready to predict that OpenAI, Anthropic, and Google will replace Pearson, McGraw-Hill, and Cengage, I am absolutely ready to predict that the large publishers will begin creating bundles of proprietary supplemental materials designed specifically for use with proprietary language models. It’s hard to say exactly what this will end up looking like, but one thing is certain: the difference in the design and format of course materials pre- and post-generative AI will be even more dramatic than the difference between the design and format of course materials pre- and post-internet.

Rather than waiting to act until after proprietary generative AI tools have become widely adopted in the course materials market and significant effort is required to displace them, we should take the initiative now to ensure that instructors who want to use LLMs as course materials have access to high quality, openly licensed options from the start. Those options should include both the models themselves and the additional resources necessary to use them easily and effectively. Creating and sharing Open Educational Language Models, or OELMs (pronounced “elms”), is one example of a proactive step we can take to ensure that generative AI tools can move us forward on affordability, access, and equity instead of backward. (There are, doubtless, many other steps that could be taken. This is the one I’m currently working on.)

Open Educational Language Models

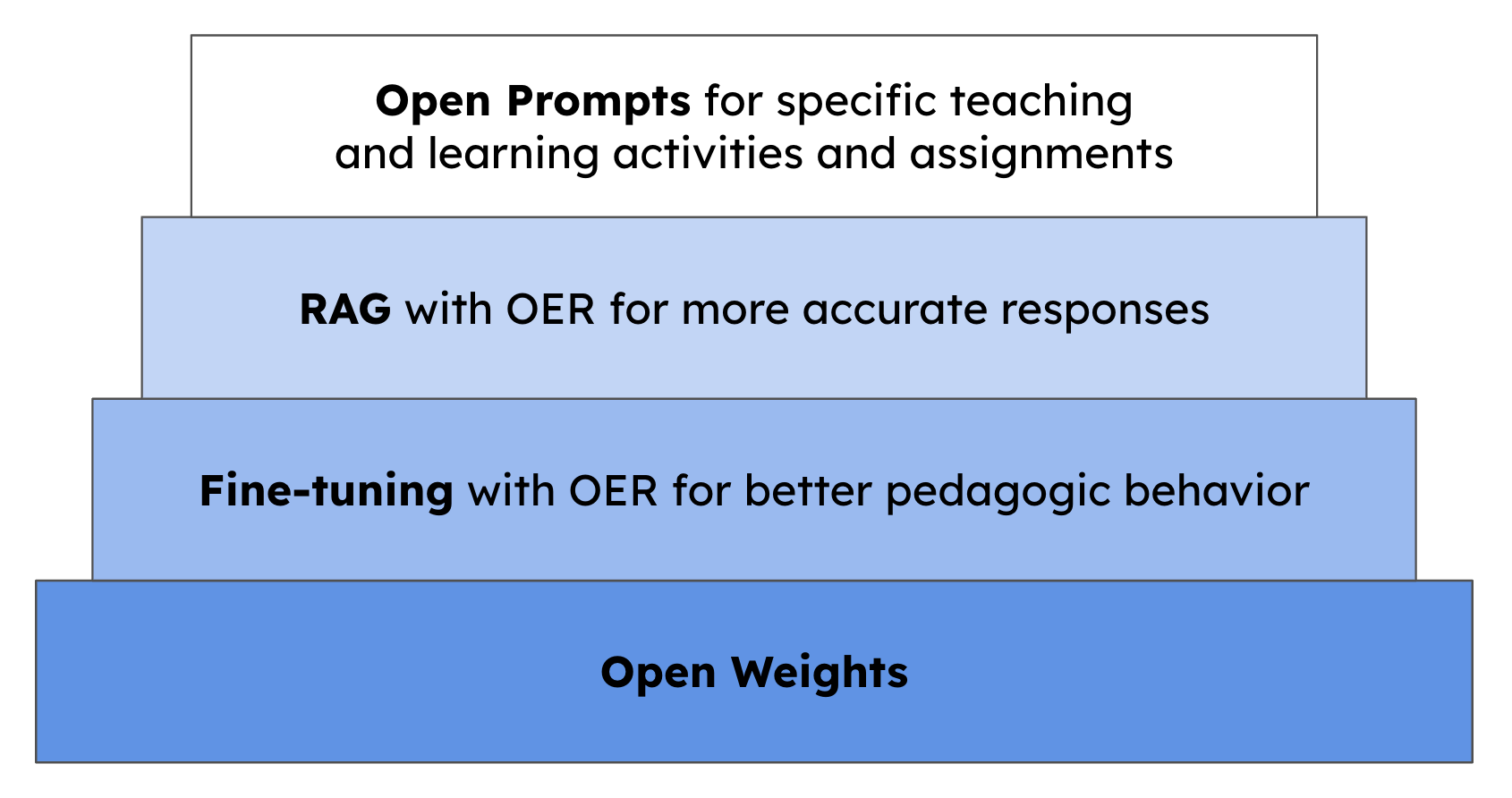

Open Educational Language Models (OELMs) bring together a collection of openly licensed components that allow an openly licensed language model to be used easily and effectively in support of teaching and learning.

The foundational component of an OELM is a set of model weights, which are the “brains” of a language model. High quality, openly licensed model weights have been created and shared by Meta, IBM, Mistral, Alibaba, and many others, and cost tens of millions of dollars – maybe over one hundred million dollars – to create. If we use them exactly as we find them, interacting with these models may indeed result in some of the harms described above. But because the model weights are open, we have the opportunity to revise and remix them. Because the model weights are open, we can change the way learners and teachers interact with them in order to increase access, affordability, and equity. Because the model weights are open, we have significantly greater agency.

In an OELM, the open model weights are supplemented by other components that make them easier to use, that address key concerns about generative AI in education, and that answer questions including:

- How do I use it effectively?

- Can I trust its responses to be accurate?

- Can I trust its behavior to be appropriate?

How do I use it effectively? An OELM includes a comprehensive collection of pre-written prompts. These prompts are designed to support a wide range of activities. For learners, these activities might include debates, arguments, or dialogues, open-ended exploration of concepts and ideas, asking clarifying questions and receiving individualized answers, and engaging in interactive review with immediate, diagnostic feedback. For teachers, these activities might include lesson planning, designing an active learning exercise for use in class, differentiating instruction, revising or remixing OER, and drafting feedback on student work.

Can I trust its responses to be accurate? An OELM includes a curated collection of OER that is used for retrieval augmented generation (RAG), a process for making a model’s responses more accurate. It works as follows. When a teacher or learner submits a prompt, the collection of OER is first searched for relevant information, and whatever is found is added to the prompt before the prompt is sent to the model. The model then uses the retrieved information as the basis for its response to the user, augmenting its general knowledge about the topic. Conceptually, this is similar to the way a librarian might refer to a reference work before answering a question.
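To make the RAG flow a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the three passages stand in for a curated OER collection, the names `OER_PASSAGES`, `retrieve`, and `augment_prompt` are hypothetical, and relevance is scored by simple word overlap. A real system would more likely use embeddings or a search index, but the shape of the process is the same: search first, add what you find to the prompt, then send the prompt to the model.

```python
import re
from collections import Counter

# Hypothetical stand-in for a curated OER collection: passage id -> text.
OER_PASSAGES = {
    "bio-photosynthesis": "Photosynthesis converts light energy into chemical energy stored in glucose.",
    "bio-respiration": "Cellular respiration releases energy from glucose to produce ATP.",
    "chem-bonds": "Covalent bonds form when atoms share pairs of electrons.",
}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question, passages, top_k=2):
    """Rank passages by how many question words they share (a toy relevance score)."""
    question_words = Counter(tokenize(question))
    scored = []
    for passage_id, text in passages.items():
        overlap = sum((question_words & Counter(tokenize(text))).values())
        if overlap > 0:
            scored.append((overlap, passage_id))
    # Highest overlap first; ties are broken alphabetically by passage id.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [passage_id for _, passage_id in scored[:top_k]]


def augment_prompt(question, passages, top_k=2):
    """Prepend the retrieved OER passages to the user's question."""
    ids = retrieve(question, passages, top_k)
    context = "\n".join(f"[{pid}] {passages[pid]}" for pid in ids)
    return f"Use only these OER excerpts when answering:\n{context}\n\nQuestion: {question}"
```

Calling `augment_prompt("How does photosynthesis store light energy?", OER_PASSAGES)` returns a prompt that contains the photosynthesis and respiration passages but not the unrelated chemistry passage. That augmented prompt, rather than the bare question, is what gets sent to the model.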

Can I trust its behavior to be appropriate? An OELM includes a specially designed collection of open content that can be used to steer the model’s behavior. This can be embedded in the system prompt (a prompt which the user doesn’t see but which steers model behavior in the background) or used for fine-tuning. Fine-tuning is the process by which a model’s behavior is permanently changed in some desired way. In the OELM context, fine-tuning is the process by which a model can be made to behave more pedagogically. For example, a model fine-tuned to behave as a helpful customer service agent (like ChatGPT was) answers student questions directly, whereas a model fine-tuned to behave more pedagogically might ask students additional questions or provide hints before giving an answer. Conceptually, fine-tuning a model is similar to giving a knowledgeable graduate student a few hours of training so they can be a more effective tutor.
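The system prompt route is the easier of the two to illustrate. The sketch below assumes the role/content message format that many chat-style model APIs accept. The steering text and the names `PEDAGOGICAL_SYSTEM_PROMPT` and `build_messages` are hypothetical; in an OELM the steering text would come from the openly licensed steering content rather than being hard-coded like this.

```python
# Hypothetical steering text, written here only for illustration.
PEDAGOGICAL_SYSTEM_PROMPT = (
    "You are a patient tutor. Do not give the final answer right away. "
    "Ask a guiding question or offer a hint first, and confirm the answer "
    "only after the student has attempted it."
)


def build_messages(student_question, system_prompt=PEDAGOGICAL_SYSTEM_PROMPT, history=None):
    """Return a chat message list with the hidden system prompt placed first."""
    messages = [{"role": "system", "content": system_prompt}]
    # Earlier turns of the conversation, if any, go between the system prompt and the new question.
    messages.extend(history or [])
    messages.append({"role": "user", "content": student_question})
    return messages
```

The learner only ever types the final `user` message. The `system` message travels with every request in the background, which is why editing it (something open licensing gives you permission to do) changes how the model behaves without retraining it.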

Each of these four components – the model weights, content for fine-tuning, content for RAG, and pre-written prompts – can be openly licensed, providing teachers, learners, and others with permission to engage in the 5R activities. Think of the model weights as the core textbook and the other components as the supplemental materials necessary for widespread adoption. And just like with traditional OER, the ability to copy, edit, and share prompts and other OELM components means they can be localized in order to best meet the needs of individual learners, minimize the potential harms associated with generative AI, and increase access, affordability, and equity.

Components of an Open Educational Language Model (OELM)


Running OELMs Locally

The foundational R in the 5Rs framework is Retain – to take advantage of an educational resource being openly licensed, you have to be able to download your own copy of the resource. Then you can take that copy you downloaded and revise, remix, reuse, and redistribute it to meet your needs and the needs of others around you. (You’ll note that there’s no “large” in Open Educational Language Model. That’s because small models are a key to this strategy over the medium to long term.)

There is an active community (including projects like Ollama, LM Studio, and llama.cpp) working to make it easy to download open-weights models and run them on consumer hardware. Right now (in late 2024), there are already many open-weights models that can be run on desktop computers, laptops, and even smartphones without any connection to the internet. For example, for my Substack Reviewing Research on AI in Education, I created an agent (powered by a Llama 3.1 open-weights model running locally on my laptop via Ollama) that reads 150 – 300 abstracts each morning and recommends the best 3 – 5 for me to review further.
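For readers who want a sense of what talking to a locally running model involves, here is a minimal sketch using only the Python standard library. It is not the code behind the agent described above. It assumes Ollama is installed and running on its default local port (11434) and that a model tagged `llama3.1` has already been downloaded; the function names `build_request` and `ask_local_model` are hypothetical.

```python
import json
import urllib.request

# Ollama's default local endpoint for single-prompt completions.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3.1"):
    """Build the HTTP request for a single, non-streaming completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt, model="llama3.1"):
    """Send the prompt to the locally running model and return its text reply."""
    with urllib.request.urlopen(build_request(prompt, model)) as response:
        return json.loads(response.read())["response"]


# Example (requires a running Ollama server with the model downloaded):
# print(ask_local_model("Explain photosynthesis in two sentences."))
```

Note that the request goes to `localhost`, so once the model weights are on the device, nothing in this exchange requires an internet connection.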

Advances in running models locally are important because people without reliable access to the internet are currently unable to take advantage of generative AI in support of teaching and learning. However, the capability to run models locally means that an OELM can be downloaded to a thumb drive and sent wherever a suitable device is available – and then used without regard for internet connectivity. This dramatically increases the number of people who can benefit from generative AI in support of teaching and learning.

The ability to run OELMs locally also addresses a number of concerns people have about generative AI. For example, many people are concerned about data privacy and how their data are used by the providers of proprietary generative AI models. However, when a model runs locally on the user’s device, the user’s data never leave that device – meaning the proprietary providers never have access to the user’s data. Running smaller models on local devices also addresses concerns about the amount of power, water, and other resources consumed by the huge data centers that serve AI models centrally.

And finally, the ability to download OELMs and run them locally provides people with the ability to engage in the 5R activities. You can download the model weights – as well as the other components – and run them locally just as you found them, or revise and remix them first. Empowering teachers and learners to more fully exercise their agency with regard to LLMs will be key to combining the power of open and the power of generative AI in the service of improving access, affordability, and equity over the long term.

Conclusion

As the course materials market begins the transition toward more AI-powered products and offerings, openness is more important than ever for those of us who care about increasing access, affordability, and equity. There are critical lessons we need to learn about how to leverage AI effectively in the service of teaching and learning, and how the added agency that comes with openness can help us do that even more powerfully. I’m super excited to begin learning these lessons. Let me know if you’re interested in collaborating on this line of work – I’m making plans for spring term 2025.
